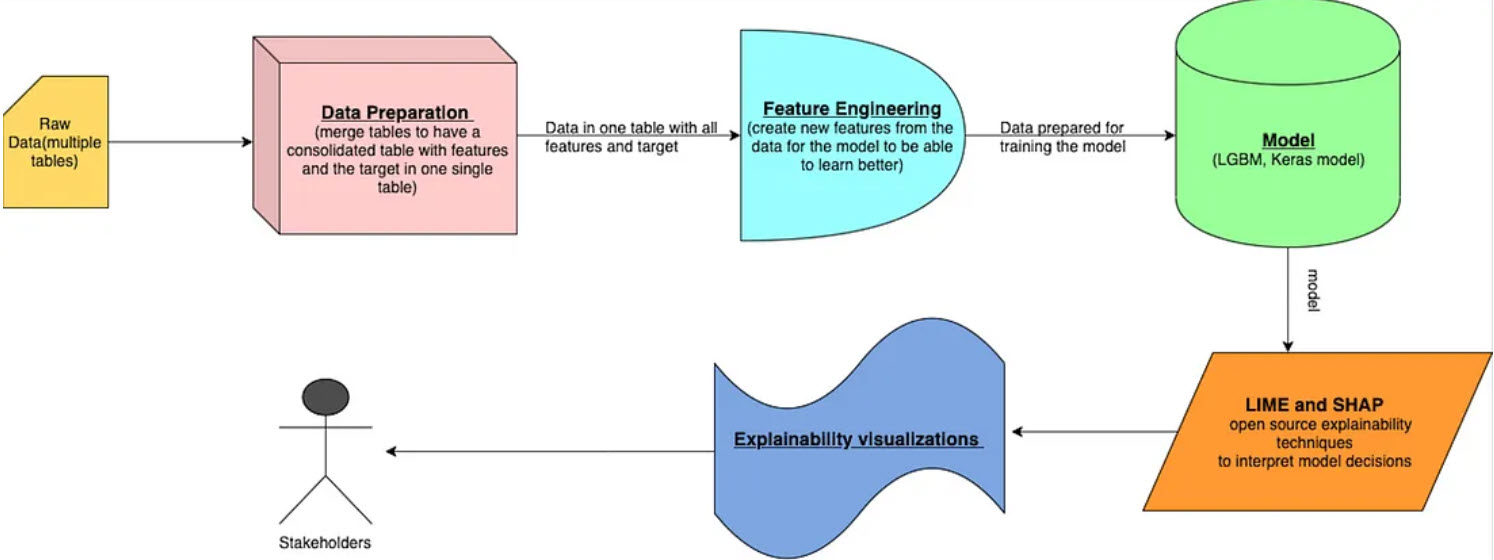

AI-driven Low-Code/No-Code (LCNC) platforms are revolutionizing software development by enabling non-programmers to build applications. However, as AI integration within LCNC tools becomes more sophisticated, a significant challenge emerges: AI-generated decisions often come with no explanation. Without clear insight into how AI models reach their outputs, users may struggle to trust and adopt these technologies effectively. This study explores the intersection of Explainable AI (XAI) and LCNC platforms, addressing the need for transparency in AI-driven automation. I analyze existing XAI techniques, such as SHAP, LIME, and attention-based methods, and assess their suitability for LCNC environments.

I also propose a layered explainability framework designed for diverse user groups, including end-users, developers, and regulatory bodies. By enhancing AI interpretability within LCNC tools, I aim to bridge the trust gap and support responsible AI adoption while preserving ease of use. These findings provide a roadmap for industry practitioners and researchers seeking to build more transparent, accountable, and user-friendly AI-driven LCNC platforms.

1. Understanding LCNC and AI Integration

A. Overview of LCNC Platforms and Their Benefits for Non-Programmers

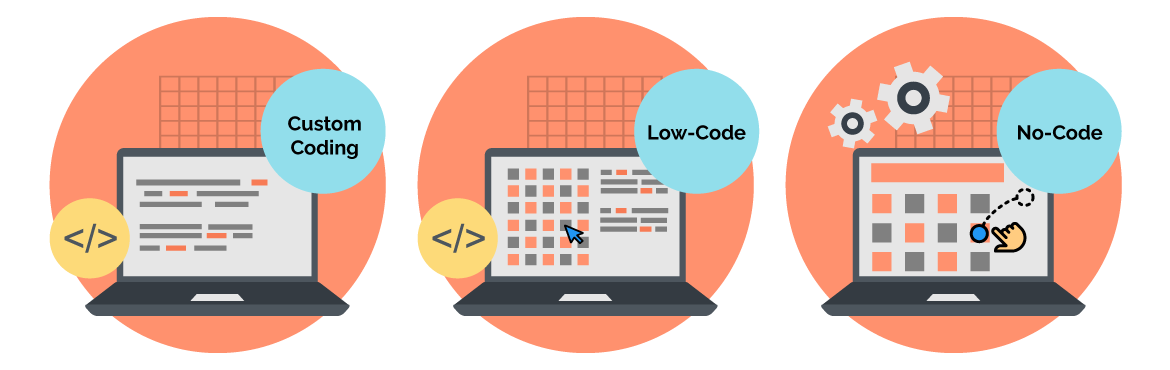

Low-Code/No-Code (LCNC) platforms have emerged as a revolutionary tool for democratizing software development. These platforms allow users with little to no programming knowledge to build applications using visual interfaces, drag-and-drop components, and pre-built functionalities. The primary benefits of LCNC platforms include:

- Accessibility: Individuals without coding experience can develop applications, reducing reliance on professional developers.

- Speed: LCNC accelerates the development process, allowing rapid prototyping and deployment of applications.

- Cost-Effectiveness: Organizations can reduce development costs by minimizing the need for extensive software engineering teams.

- Flexibility: Business users can tailor applications to their specific needs without waiting for IT teams.

- Scalability: Many LCNC platforms are built on cloud infrastructure, which allows applications to scale as demand grows.

As AI becomes a core component of digital transformation, LCNC platforms are increasingly integrating AI capabilities to further enhance automation and decision-making.

B. Common AI-Driven Features in LCNC Tools

AI-powered LCNC platforms provide a range of functionalities that make application development even more efficient. These include:

- Automated Decision-Making: AI models analyze data and make or recommend business decisions with little or no human intervention.

- Predictive Analytics: LCNC platforms can incorporate machine learning (ML) models that predict outcomes based on historical data.

- AI-Assisted Development: Natural Language Processing (NLP)-based tools allow users to describe desired functionality in plain language, and the platform generates corresponding code or workflows.

- Chatbots and Virtual Assistants: Pre-built AI models enable the creation of AI-driven chatbots and automation solutions with minimal coding.

- Intelligent Process Automation: AI enhances automation workflows by optimizing task execution based on real-time data analysis.

C. Challenges Faced by Users in Trusting AI-Generated Outputs

Despite the advantages of AI-powered LCNC tools, non-programmers often struggle to understand and trust AI-generated outcomes (what the AI did, and why it did it) due to several challenges:

- Lack of Transparency: Many AI-driven LCNC tools function as black boxes, making it difficult for users to understand how decisions are made behind the scenes.

- Data Bias and Fairness Issues: AI models trained on biased datasets can produce unfair or inaccurate predictions, leading to skepticism about reliability.

- Over-Reliance on AI: Users may blindly trust AI-generated recommendations without validating their correctness.

- Difficulty in Debugging: Without AI expertise, non-programmers find it challenging to troubleshoot incorrect AI outputs or modify model parameters.

- Regulatory Concerns: Complying with AI governance frameworks is complex, and users may struggle to ensure that their AI implementations are ethical and compliant.

These challenges highlight the growing need for Explainable AI (XAI) in LCNC platforms to bridge the trust gap.

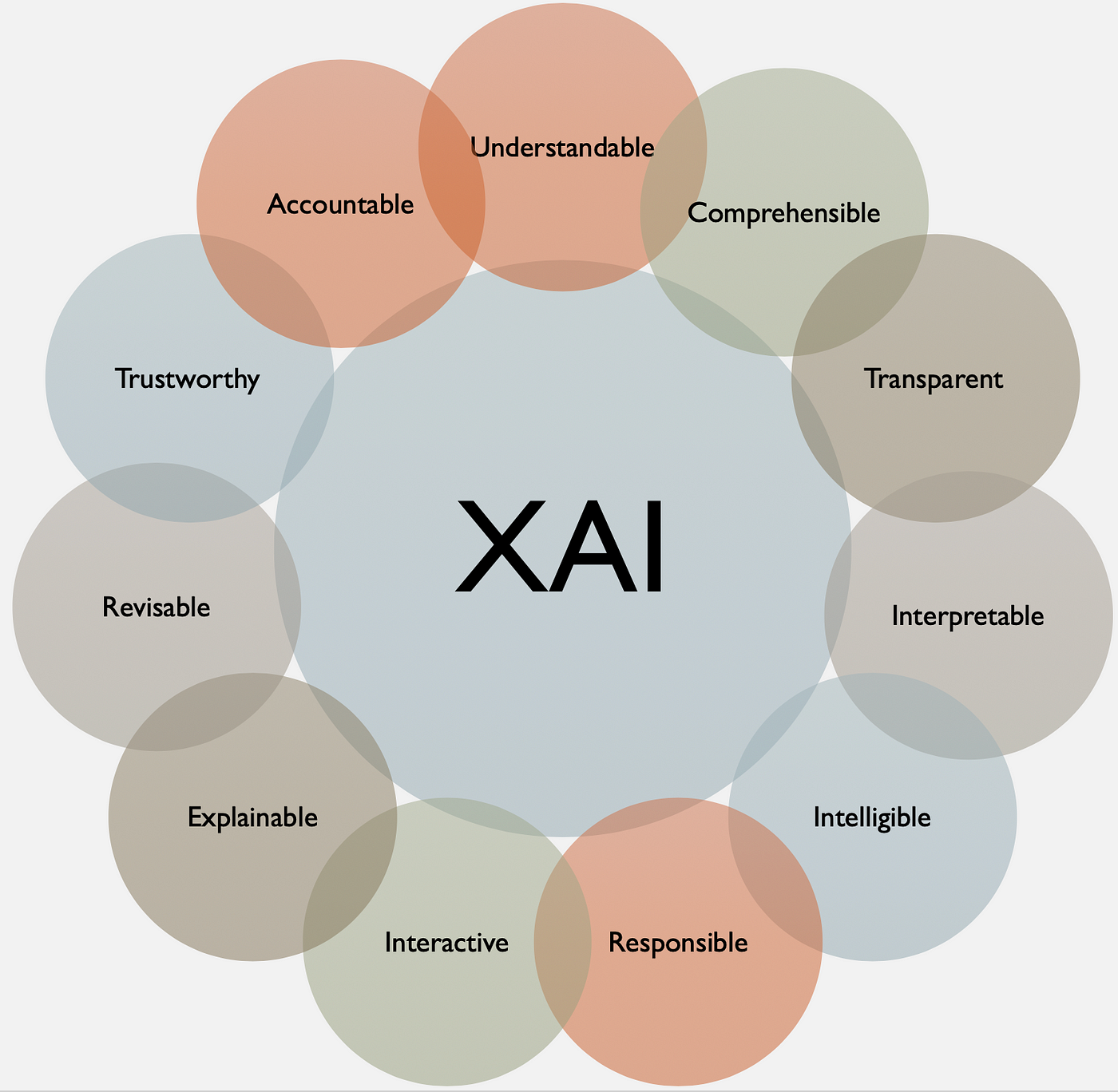

2. The Importance of Explainability in AI

A. Definition and Significance of Explainable AI (XAI)

Explainable AI (XAI) refers to AI systems designed to provide human-understandable explanations for their predictions and decisions. XAI is crucial for:

- Enhancing Trust: Users need to understand how AI reaches conclusions to confidently rely on its outputs.

- Ensuring Compliance: Regulations such as the EU's GDPR require organizations to give individuals meaningful information about the logic involved in automated decisions that significantly affect them.

- Facilitating Debugging: Developers and users can identify errors and biases in AI models.

- Improving User Adoption: Transparent AI encourages widespread adoption among businesses and individuals.

B. Types of Explainability: Post-Hoc vs. Intrinsic Explainability

- Post-Hoc Explainability: Applied to an already-trained model to explain its individual predictions, using techniques such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations).

- Intrinsic Explainability: Arises from the model's own structure. Models such as decision trees and linear regression are interpretable by design, so their outputs can be understood by inspecting the model itself.
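The following sketch makes the post-hoc/intrinsic distinction concrete. It computes exact Shapley values, the quantity that SHAP approximates, by enumerating every coalition of features. It is not the `shap` library. It assumes that a feature "absent" from a coalition takes a fixed baseline value, and it is only practical for a handful of features. For an intrinsically interpretable linear model, the result can be read directly from the coefficients. For a model with interactions, such as the hypothetical `black_box` function below, the enumeration is what reveals how the output is split.

```python
from __future__ import annotations

from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one prediction of `model` at input `x`.

    Features outside a coalition take their `baseline` value (an assumption;
    real tools offer several ways to "remove" a feature). Cost grows as 2^n,
    so this is a teaching sketch for small n only.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                present = set(coalition)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = [x[j] if j in present or j == i else baseline[j] for j in range(n)]
                # Marginal contribution of feature i when added to this coalition.
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


# Intrinsically interpretable: a linear model whose weights are the explanation.
WEIGHTS = [2.0, -1.0, 0.5]


def linear(v: Sequence[float]) -> float:
    return 1.0 + sum(w * xi for w, xi in zip(WEIGHTS, v))


# Hypothetical "black box" with an interaction between features 0 and 1.
def black_box(v: Sequence[float]) -> float:
    return v[0] * v[1] + v[2]
```

Take `x = [3.0, 2.0, 4.0]` with an all-zero baseline. `shapley_values(linear, x, baseline)` returns approximately `[6.0, -2.0, 2.0]`, which is each weight times its feature value. `shapley_values(black_box, x, baseline)` returns approximately `[3.0, 3.0, 4.0]`: the interaction term (3 × 2 = 6) is split equally between the two features that produce it. In both cases the values sum to the model's output at `x` minus its output at the baseline.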

C. Existing Methods (SHAP, LIME, Attention Mechanisms) and Their Limitations in LCNC Platforms

While post-hoc explainability techniques help interpret AI outputs, they face several limitations when applied in LCNC environments:

- SHAP and LIME: Their outputs (numeric feature attributions and plots) require technical expertise to interpret, making them less accessible to non-programmers.

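- Attention Mechanisms: Common in deep learning, but attention weights indicate which inputs the model weighted most heavily, which does not necessarily explain why it produced a given output, so they may not give end-users a meaningful explanation.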

- Model-Agnostic Methods: Their outputs may not fit well within the visual, drag-and-drop interfaces of LCNC platforms.

These limitations call for a tailored approach to integrating XAI in LCNC platforms.

3. Challenges of Implementing XAI in LCNC

- Complexity vs. Usability Tradeoff in No-Code Interfaces: LCNC platforms prioritize simplicity, but XAI methods often introduce complexity. The challenge lies in providing explainability without overwhelming users with technical details.

- Performance Concerns (Interpretable Models vs. Black-Box Models): More interpretable models, such as decision trees, may sacrifice predictive accuracy compared to black-box models such as deep neural networks.

- Lack of User Expertise in AI Interpretability Methods: Non-programmers may not understand interpretability techniques, limiting their ability to use XAI effectively.

4. Proposed Framework for Explainability in AI-Driven LCNC

A structured approach to integrating XAI in LCNC platforms can support both transparency and usability. Because many LCNC users care more about outcomes than about how the AI works, explainability should be non-intrusive and actionable rather than complex. My proposed framework has three layers:

A. User-Level Explainability (Minimal but Effective)

Some UX features include:

- Confidence Scores & Visual Cues: Displaying confidence levels (e.g., “90% match”) on AI-generated outputs so users can gauge reliability without diving into model details. For example:

🔹 High Confidence (80-100%) → Strong Recommendation

🟠 Medium Confidence (50-79%) → Proceed with Caution

🔻 Low Confidence (<50%) → Needs User Review / Manual Intervention

- “Why This Suggestion?” Tooltips: Simple explanations such as “Suggested because similar users preferred this layout” help users understand AI recommendations in a natural way.

- Undo & Alternative Suggestions: Instead of justifying why an AI output is correct, allow users to reject, tweak, or choose alternatives, giving them control rather than explanations.

Example: In an AI-assisted form generator, showing “This field was auto-added based on similar templates” reassures users without overwhelming them with technical details.
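The confidence bands, tooltip, and alternative suggestions described above could be wired together roughly as follows. This is a minimal sketch under stated assumptions. `Suggestion`, `confidence_band`, and `build_card` are hypothetical names. The model is assumed to return a confidence in [0, 1], and the thresholds mirror the 80% and 50% cut-offs listed above. Lower-ranked candidates are returned as alternatives, so the user can switch instead of reading a justification.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Suggestion:
    """One AI-generated option (hypothetical schema)."""
    label: str
    confidence: float  # model confidence, assumed to be in [0, 1]
    reason: str        # one-line, plain-language reason for the tooltip


def confidence_band(confidence: float) -> tuple[str, str]:
    """Map a confidence score to the icon and advice bands from the text."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be in [0, 1], got {confidence}")
    if confidence >= 0.80:
        return "🔹", "Strong Recommendation"
    if confidence >= 0.50:
        return "🟠", "Proceed with Caution"
    return "🔻", "Needs User Review / Manual Intervention"


def build_card(candidates: list[Suggestion], max_alternatives: int = 2) -> dict[str, object]:
    """Build the data a UI card would show: top suggestion, badge, tooltip, alternatives."""
    if not candidates:
        raise ValueError("at least one candidate suggestion is required")
    ranked = sorted(candidates, key=lambda s: s.confidence, reverse=True)
    top = ranked[0]
    icon, advice = confidence_band(top.confidence)
    return {
        "suggestion": top.label,
        "badge": f"{icon} {top.confidence:.0%} match: {advice}",
        "tooltip": f"Why this suggestion? {top.reason}",
        # Offer the next-best options so the user can override the AI.
        "alternatives": [s.label for s in ranked[1 : 1 + max_alternatives]],
    }
```

Consider three candidates: "Two-column layout" (0.92), "Single-column layout" (0.61), and "Tabbed layout" (0.35). The card would show the badge `🔹 92% match: Strong Recommendation` and offer the other two layouts as alternatives.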

B. Developer-Level Explainability (For Advanced Users)

Some LCNC platforms cater to semi-technical users (e.g., citizen developers, power users) who may want more control over AI behaviors. Explainability for this group should be interactive and optional, such as:

- Feature Importance Insights: Let users see which factors influenced AI decisions (e.g., “Font color was chosen because of brand guidelines”).

- Customizable AI Rules: Allow users to set constraints, adjust weights, or override AI decisions in a guided manner.

- Debugging Tools: Interactive AI visualization tools (e.g., decision tree views, LIME explanations) for advanced users who want to understand or fine-tune AI behavior.

Example: In an AI-powered workflow automation tool, highlighting the key triggers (e.g., “This action was recommended because 80% of similar workflows follow this pattern”) makes debugging easier.
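A pattern-based explanation like the one above can come almost directly from a frequency count. The sketch below assumes the platform keeps past workflows as ordered lists of step names. `recommend_next_action` is a hypothetical helper that does three things: it suggests the most common next step, phrases the supporting evidence as a plain-language reason, and honors a user-defined block list as a simple instance of the customizable AI rules above. Counting transitions is a deliberately simple stand-in for whatever model a real platform would use.

```python
from __future__ import annotations

from collections import Counter


def recommend_next_action(
    current_step: str,
    past_workflows: list[list[str]],
    blocked_actions: frozenset[str] = frozenset(),
) -> tuple[str, str] | None:
    """Suggest the step that most often followed `current_step`, with a reason.

    Returns (action, explanation), or None if no allowed action ever followed
    `current_step` in the history.
    """
    followers: Counter[str] = Counter()
    for steps in past_workflows:
        # Count every observed transition current_step -> next step.
        for prev, nxt in zip(steps, steps[1:]):
            if prev == current_step:
                followers[nxt] += 1
    total = sum(followers.values())
    for action, count in followers.most_common():
        if action in blocked_actions:
            continue  # a user-defined rule overrides the AI suggestion
        explanation = (
            f"Recommended because '{action}' followed '{current_step}' "
            f"in {count} of {total} past cases ({count / total:.0%})."
        )
        return action, explanation
    return None
```

Suppose that, across five past workflows, "Form submitted" was followed by "Send email" four times and by "Notify Slack" once. The function recommends "Send email" with a reason citing 4 of 5 past cases (80%). If the user blocks "Send email", it falls back to "Notify Slack".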

C. Regulatory & Compliance Explainability (For Enterprise & Legal Needs)

Organizations that use LCNC for critical applications (healthcare, finance, legal automation) may require auditable AI decisions for compliance and risk management. Explainability here should be passive (logs, reports) rather than intrusive.

- AI Decision Logs: Auto-generate reports that track how AI models made decisions over time (e.g., why a loan was approved or rejected).

- Bias & Fairness Analysis: Built-in fairness checks that alert users when AI-generated decisions show signs of bias.

- Exportable XAI Reports: Compliance teams can access AI explanations when needed, without burdening regular users.
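One simple form of the Bias & Fairness Analysis item above is to compare approval rates across groups. The sketch below uses the disparate impact ratio, defined as the lowest group's selection rate divided by the highest. It flags ratios below 0.8, a threshold borrowed from the "four-fifths rule" in US employment-selection guidance. The function names and the `(group, approved)` input format are assumptions. A single ratio is only a screening signal, not a complete fairness audit.

```python
from __future__ import annotations

from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g., approvals) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def fairness_alert(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> str | None:
    """Return a warning if the disparate impact ratio falls below `threshold`."""
    rates = selection_rates(decisions)
    if len(rates) < 2:
        return None  # nothing to compare
    highest = max(rates.values())
    if highest == 0:
        return None  # no group receives positive outcomes; ratio is undefined
    lowest_group = min(rates, key=lambda g: rates[g])
    ratio = rates[lowest_group] / highest
    if ratio < threshold:
        return (
            f"Possible bias: group '{lowest_group}' has a selection rate of "
            f"{rates[lowest_group]:.0%}, {ratio:.2f}x the highest group's rate "
            f"(threshold {threshold})."
        )
    return None
```

With 8 of 10 approvals for group A and 4 of 10 for group B, the ratio is 0.5 and the function returns a warning naming group B. With 8 of 10 and 7 of 10, the ratio is above 0.8 and no alert is raised.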

Example: In an AI-powered contract review LCNC tool, adding “Explain This Clause” for legal professionals ensures transparency without slowing down general users.
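Taken together, the three layers suggest a design in which each AI decision produces a single explanation record that is rendered differently for each audience. The sketch below assumes a hypothetical `Explanation` record and `render_for` function, neither taken from any particular LCNC platform. End-users get the outcome, confidence, and a one-line reason. Developers get ranked feature contributions. Compliance teams get the complete record serialized as JSON, which could be appended to an AI decision log.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from enum import Enum


class Audience(Enum):
    END_USER = "end_user"
    DEVELOPER = "developer"
    REGULATOR = "regulator"


@dataclass
class Explanation:
    """One AI decision plus explanation material at several levels (hypothetical schema)."""
    decision: str
    confidence: float  # assumed to be in [0, 1]
    summary: str       # plain-language reason for end-users
    feature_contributions: dict[str, float] = field(default_factory=dict)
    model_version: str = "unknown"


def render_for(exp: Explanation, audience: Audience) -> str:
    """Return the explanation layer appropriate for `audience`."""
    if audience is Audience.END_USER:
        # Minimal layer: outcome, confidence, one plain-language reason.
        return f"{exp.decision} ({exp.confidence:.0%} confidence): {exp.summary}"
    if audience is Audience.DEVELOPER:
        # Optional detail: contributions ranked by absolute size.
        ranked = sorted(exp.feature_contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return "\n".join([exp.decision] + [f"  {name}: {value:+.2f}" for name, value in ranked])
    # Regulator layer: the full record, suitable for an append-only decision log.
    return json.dumps(asdict(exp), sort_keys=True)
```

Consider a loan decision with contributions `{"income": 0.4, "debt_ratio": -0.6}`. End-users see it rendered as `Approve (90% confidence): Similar applications were approved`. Developers see `debt_ratio` listed first because it has the largest absolute contribution. Keeping one record and varying only the rendering means the three layers cannot drift out of sync.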

Conclusion: Key Takeaways and Impact of Integrating Explainability in LCNC

By embedding explainability within LCNC platforms, we can:

- Empower non-programmers to make informed AI-driven decisions.

- Help detect and reduce AI bias, and improve accountability in AI-powered automation.

- Enhance regulatory compliance and ensure ethical AI usage.

- Strengthen adoption of AI in industries like healthcare, finance, and education, where trust is paramount.

In conclusion, explainability in LCNC AI is not just a technical enhancement; it is a fundamental requirement for responsible and widespread AI adoption. A well-structured XAI framework can help bridge the trust gap, democratizing AI development while promoting transparency and fairness for all users.